The minute I found one of Refik Anadol's creations in the basement of Namur's marvelous theater, which was hosting the KIKK festival, I fell in love with his art. Ever since, I have been eager to try recreating works from his Machine Hallucinations series. Years later I got into procedural CAD modeling and discovered Houdini from SideFX as a great tool for generative design. It ships with a built-in tool called FLIP Tank that simulates fluid mechanics, and the framework reminded me a lot of Anadol's work. So did he do it like that?

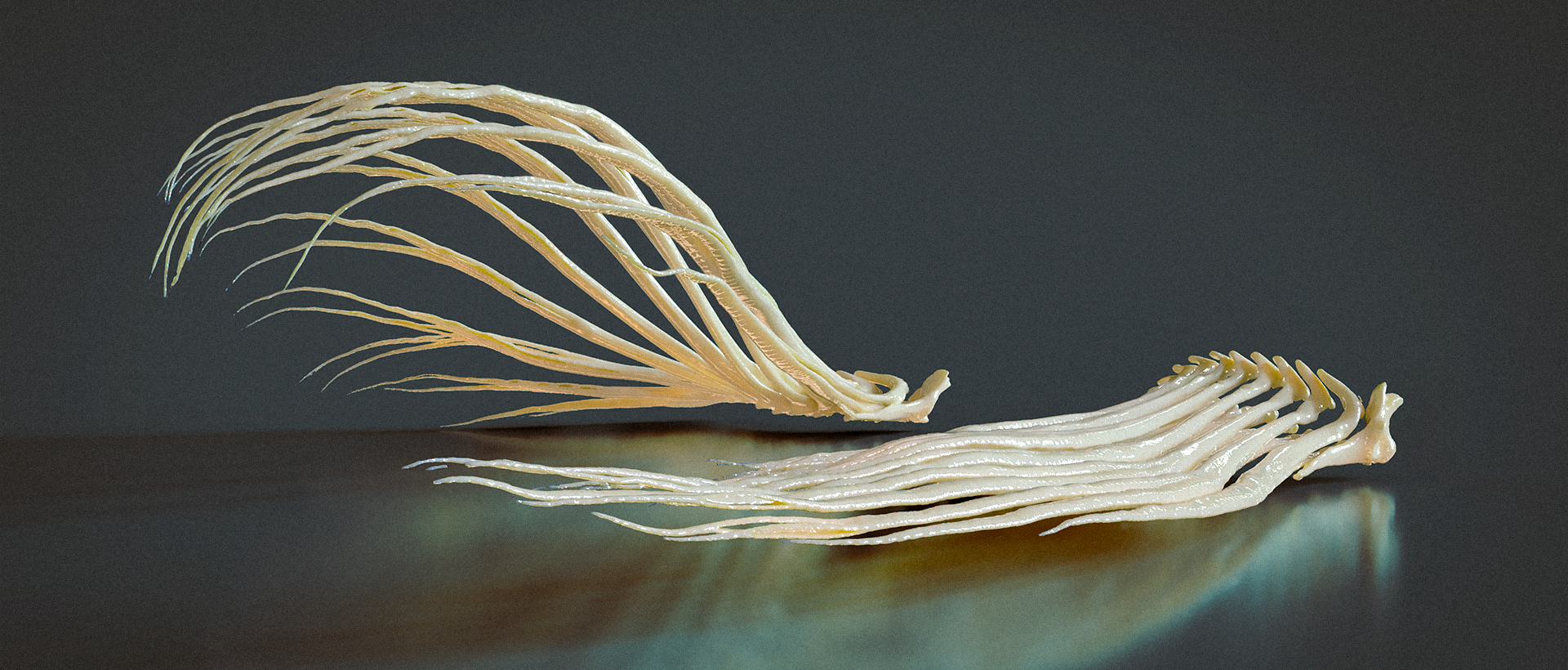

One of my first creations, made with around 800,000 particles that splash out of a random white box.

As I perceive Anadol's pipeline, it consists of two major components that can be developed separately, depending on how much time you want to spend on either the machine learning or the visual effects side of things. Here are the components:

1. The first one is an AI-generated video in which pictures fluently morph into each other. The AI generates an interpolation of the learned input data and, in a sense, animates the dataset as a motion picture. This abstract interpolation serves as what I call the back-end of the art piece. Regarding the choice of neural network, I believe that Anadol and his team used a style-based generator architecture like the original NVIDIA StyleGAN framework for their video generation. This could result in something like this:

For the artwork named "Coral Dreams", which by the way sold for about $851,130, the team fed such a neural network with 1,742,772 (!) curated "[...] images of coral/reef found on publicly available image databases" [2]. A TensorFlow implementation of StyleGAN2 can be found here [3]. The internet is filled with large open-source image datasets that you can use for training the model, so if you are super motivated you can create your very own video interpolation. In this tutorial I will just use one interpolation video by the artist @esarthouse8183 (this person creates lots of wonderful AI videos).

2. Part two is what I call the front-end of the artwork: a particle model simulating the behaviour of a fluid being whirled around in a tank. This creates the funky dots splashing in all directions. But now comes the clever part. The particles aren't accelerated by conventional physical forces acting on them. They move because the brightness and color information of the StyleGAN video is fed into the simulation as a force input.
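For the first component, the "morphing" can be pictured as a walk through the generator's latent space. The snippet below is only a minimal sketch of that idea, not Anadol's actual method: it assumes random 512-dimensional latent keyframes (512 is StyleGAN's default latent size) and uses spherical interpolation, a common but not mandatory choice. The function names `slerp` and `latent_walk` are made up for illustration, and the trained generator that would turn each latent vector into an image is deliberately left out.

```python
import numpy as np

def slerp(z0, z1, t):
    """Spherical interpolation between two latent vectors for t in [0, 1]."""
    u0 = z0 / np.linalg.norm(z0)
    u1 = z1 / np.linalg.norm(z1)
    # Angle between the two latent directions
    omega = np.arccos(np.clip(np.dot(u0, u1), -1.0, 1.0))
    if omega < 1e-8:
        # Nearly parallel vectors: fall back to linear interpolation
        return (1.0 - t) * z0 + t * z1
    s = np.sin(omega)
    return (np.sin((1.0 - t) * omega) / s) * z0 + (np.sin(t * omega) / s) * z1

def latent_walk(keyframes, steps_per_segment):
    """Return one latent vector per video frame, visiting every keyframe in order."""
    frames = []
    for z0, z1 in zip(keyframes[:-1], keyframes[1:]):
        for i in range(steps_per_segment):
            frames.append(slerp(z0, z1, i / steps_per_segment))
    frames.append(keyframes[-1])
    return np.stack(frames)

# Assumption: 4 random keyframes, 30 in-between steps per segment
rng = np.random.default_rng(0)
keyframes = rng.standard_normal((4, 512))
walk = latent_walk(keyframes, steps_per_segment=30)
print(walk.shape)  # (91, 512)
```

Each row of `walk` would then be passed through a trained generator to render one frame of the interpolation video.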

A FLIP tank with lots of particles. The function is built into the VFX software Houdini by default. The box acts as a kind of bounding box that the particles can't pass, just like an aquarium. This box limit can actually also be observed in some of Anadol's artworks.

If you know your way around Houdini, this particle simulation will be an easy one to set up. My simple pipeline just consists of a DOPNET containing a FLIP solver that is fed by the following inputs: a FLIP object, which is basically just a box (10, 0.5, 10) full of our fluid particles in their starting configuration, and a POPVOP, where we can assign attributes to the particles in our tank.

To adjust the number of particles you need to access the FLIP object settings. For me the following values worked quite well: particle separation: 0.03, particle radius scale: 0.5, grid scale: 3. When you decrease the particle separation, Houdini will simply add more particles to fill the gaps, which results in a denser and more realistic-looking fluid. Be very careful with the particle separation, as the simulation can get very computationally expensive when the separation is too small.
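To get a feeling for why a small separation gets expensive so quickly, here is a back-of-the-envelope sketch. It assumes, purely for illustration, one particle per cube of side length equal to the particle separation; Houdini's actual seeding is different, so the absolute number will not match what the FLIP object reports. The cubic scaling is the point. The function name `estimate_particle_count` is hypothetical.

```python
def estimate_particle_count(box_size, separation):
    """Rough particle count: box volume divided by one cube per particle.

    Assumption: one particle per cube of side `separation`.
    This is NOT how Houdini seeds particles; it only shows the scaling.
    """
    sx, sy, sz = box_size
    return (sx * sy * sz) / separation ** 3

# The box from this tutorial with the separation that worked for me
base = estimate_particle_count((10, 0.5, 10), 0.03)
# Same box with half the separation
halved = estimate_particle_count((10, 0.5, 10), 0.015)

print(f"estimate at 0.03:  {base:,.0f}")
print(f"estimate at 0.015: {halved:,.0f}")
print(f"growth factor:     {halved / base:.1f}")
```

Halving the separation multiplies the estimate by roughly eight, so it pays off to lower the value in small steps.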

The modifications to the POPVOP are also relatively straightforward. You basically just need to remap the pixel color information of one video frame from our AI interpolation video to the X/Y position of our particle grid. You can achieve this with the following node pipeline.

With the colormap node we can then point to the file path of the interpolation video and make the frame number dependent on the time elapsed. This way the color of the particles changes continuously. The other modification is that we feed the color information CLR into the FORCE input of our GEOMETRYVOPOUTPUT. By multiplying this color information by a constant factor you can adjust the force intensity. Furthermore, the density and viscosity of the fluid need to be adjustable so that our fluid behaves a bit like lava. This can be done by placing a POPWRANGLE as an input of the POPVOP with two simple VEX expressions that overwrite these two particle attributes: @viscosity = 100; and @density = 1000;. Now you can make the fluid appear more viscous and dense.
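Outside of Houdini, the logic of this VOP network can be sketched in a few lines of NumPy. This is only an illustration of the idea, not an export of my node graph: the function names, the nearest-pixel lookup, the box range of -5 to 5 and the 24 fps frame rate are all assumptions, and image orientation (which row counts as "up") is ignored.

```python
import numpy as np

def frame_index(time_s, fps, n_frames):
    """Pick the video frame for the elapsed time, looping at the end."""
    return int(time_s * fps) % n_frames

def color_force(positions, frame, box_min, box_max, gain):
    """Look up a color per particle and scale it into a force vector.

    positions: (N, 2) horizontal particle coordinates inside the tank
    frame:     (H, W, 3) RGB image with values in [0, 1]
    gain:      constant factor that sets the force intensity
    """
    # Normalize positions to [0, 1] across the tank footprint
    uv = np.clip((positions - box_min) / (box_max - box_min), 0.0, 1.0)
    h, w, _ = frame.shape
    # Nearest-pixel lookup (assumption: no filtering)
    cols = np.minimum((uv[:, 0] * w).astype(int), w - 1)
    rows = np.minimum((uv[:, 1] * h).astype(int), h - 1)
    rgb = frame[rows, cols]
    # The RGB triple is used directly as the (x, y, z) force components
    return gain * rgb

# Tiny test frame: left half black, right half white
frame = np.zeros((2, 2, 3))
frame[:, 1] = 1.0

# Two particles in a tank spanning -5..5 (assumed to be centered at the origin)
positions = np.array([[-4.0, 0.0], [4.0, 0.0]])
forces = color_force(positions, frame, box_min=-5.0, box_max=5.0, gain=5.0)
print(forces)
print(frame_index(2.5, fps=24, n_frames=100))  # 60
```

The particle over the black half receives no force at all, while the one over the white half is pushed equally along all three axes. Because the green channel ends up as the vertical component, bright regions lift particles upward.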

In an original Anadol, I believe the driving forces are defined a little differently, since the particles in his artworks move a lot more sideways. A different force definition would also fix the problem that, in my setup, some particles keep being accelerated for quite a long time under the influence of one bright region in the background video. As soon as particles leave the bright parts of the frame, they sink, since the applied forces are weaker than gravity. With a bit more patience and tweaking this could also be fixed.

Last but not least, I added an outer physical box that matches the inner dimensions of the fluid solver's bounding box. Et voilà! This already looks a lot like an original. The particles in Anadol's artworks have a slight glow to them, so they seem to emit light, which creates this mythical atmosphere. After some shading and material definitions you could already render this out.

I know this was kind of a quick walk-through, but I hope you now have a grasp of the major concept behind a Refik Anadol piece from the Machine Hallucinations series. Below you can find an example file of the Houdini pipeline. You need to acquire the AI videos for testing yourself, since I do not own any of them.

:-)

Sources:

[1] Aorist.art, [Online; accessed 21. Apr. 2023], Nov. 2021. [Online]. Available:

https://collect.aorist.art/artwork/machine-hallucinations-coral-dreams-66

[2] ES Art House, [Online; accessed 21. Apr. 2023], Apr. 2021. [Online]. Available: https://www.youtube.com/shorts/6GjIKQVLgNE

Also check out:

https://www.koeniggalerie.com/blogs/exhibitions/refik-anadol-machine-hallucinations-nature-dreams
